Provision

Input Parameters for Provision Tool

Fill in all provision-specific parameters in input/provision_config.yml

| Name | Default, Accepted Values | Required? | Additional Information |
|---|---|---|---|
| public_nic | eno2 | required | The NIC/ethernet card that is connected to the public internet. |
| admin_nic | eno1 | required | The NIC/ethernet card that is used for shared LAN on Motherboard (LOM) capability. |
| admin_nic_subnet | 10.5.0.0 | required | The intended subnet for shared LOM capability. Because the last 16 bits (2 octets) of the IPv4 address are dynamic, ensure that the parameter value is set to x.x.0.0. |
| pxe_nic | eno1 | required | The NIC used to obtain routing information. |
| discovery_mechanism | mapping, bmc, snmp | required | Indicates the mechanism through which Omnia will discover nodes for provisioning. mapping indicates that the user has provided a valid mapping file path with details regarding the MAC ID of the NIC, IP address and hostname. bmc indicates that the servers in the cluster will be discovered by Omnia using BMC; in this case, the user should enable IPMI over LAN in iDRAC settings if the iDRACs are in static mode. snmp indicates that Omnia will discover the nodes based on the provided IP of the switch to which the cluster servers are connected. SNMP should be enabled on the switch. |
| pxe_mapping_file_path | | optional | The mapping file consists of the MAC address and its respective IP address and hostname. If static IPs are required, create a csv file in the format MAC,Hostname,IP. A sample file is provided here: examples/pxe_mapping_file.csv. If not provided, ensure that |
| bmc_nic_subnet | 10.3.0.0 | optional | If provided, Omnia will assign static IPs to BMC (iDRAC) NICs on the compute nodes within the provided subnet. Because the last 16 bits (2 octets) of the IPv4 address are dynamic, ensure that the parameter value is set to x.x.0.0. When the PXE range and BMC subnet are provided, corresponding NICs will be assigned IPs with the same 3rd and 4th octets. |
| pxe_switch_ip | | optional | PXE switch that will be connected to all iDRACs for provisioning. This switch needs to be SNMP-enabled. |
| pxe_switch_snmp_community_string | public | optional | The SNMP community string used to access statistics, MAC addresses and IPs stored within a router or other device. |
| bmc_static_start_range | | optional | The start of the IP range for iDRACs in static mode. Ex: 172.20.0.50 - 172.20.1.101 is a valid range; however, 172.20.0.101 - 172.20.1.50 is not. |
| bmc_static_end_range | | optional | The end of the IP range for iDRACs in static mode. Note: To create a meaningful range of discovery, ensure that the last two octets of |
| bmc_username | | optional | The username for iDRAC. The username must not contain -,, ‘,”. Required only if iDRAC_support: true and the discovery mechanism is BMC. |
| bmc_password | | optional | The password for iDRAC. The password must not contain -,, ‘,”. Required only if iDRAC_support: true and the discovery mechanism is BMC. |
| pxe_subnet | 10.5.0.0 | optional | The PXE subnet details. This is required only when the discovery mechanism is BMC. For mapping and snmp based discovery provide the |
| ib_nic_subnet | | optional | InfiniBand IP range used to assign IPv4 addresses. When the PXE range and BMC subnet are provided, corresponding NICs will be assigned IPs with the same 3rd and 4th octets. |
| node_name | node | required | The intended node name for nodes in the cluster. |
| domain_name | | required | DNS domain name to be set for iDRAC. |
| provision_os | rocky, rhel | required | The operating system image that will be used for provisioning compute nodes in the cluster. |
| provision_os_version | 8.6, 8.0, 8.1, 8.2, 8.3, 8.4, 8.5, 8.7 | required | OS version of provision_os to be installed. |
| iso_file_path | /home/RHEL-8.6.0-20220420.3-x86_64-dvd1.iso | required | The path where the user places the ISO image that needs to be provisioned on target nodes. The ISO file should be Rocky8-DVD or RHEL-8.x-DVD. |
| timezone | GMT | required | The timezone that will be set during provisioning of the OS. Available timezones are provided in provision/roles/xcat/files/timezone.txt. |
| language | en-US | required | The language that will be set during provisioning of the OS. |
| default_lease_time | 86400 | required | Default lease time in seconds that will be used by DHCP. |
| provision_password | | required | Password used while deploying the OS on bare metal servers. The password should be at least 8 characters long and must not contain -,, ‘,”. |
| postgresdb_password | | required | Password used to authenticate into the PostgreSQL database used by xCAT. Only alphanumeric characters (no special characters) are accepted. |
| primary_dns | | optional | The primary DNS host IP queried to provide internet access to compute nodes (through DHCP routing). |
| secondary_dns | | optional | The secondary DNS host IP queried to provide internet access to compute nodes (through DHCP routing). |
| disk_partition | | optional | User defined disk partition applied to remote servers. The disk partition desired_capacity has to be provided in MB. Valid mount_point values accepted for disk partition are /home, /var, /tmp, /usr, swap. The default partition size for /boot is 1024MB and for /boot/efi is 256MB; the remaining space goes to the / partition. Values are accepted in the form of a JSON list such as: - { mount_point: "/home", desired_capacity: "102400" } |
| mlnx_ofed_path | | optional | Absolute path to a local copy of the .iso file containing Mellanox OFED packages. The image can be downloaded from https://network.nvidia.com/products/infiniband-drivers/linux/mlnx_ofed/. Sample value: |
| cuda_toolkit_path | | optional | Absolute path to a local copy of the .rpm file containing CUDA packages. The CUDA rpm can be downloaded from https://developer.nvidia.com/cuda-downloads. CUDA will be installed post provisioning without any user intervention. Eg: cuda_toolkit_path: "/root/cuda-repo-rhel8-12-0-local-12.0.0_525.60.13-1.x86_64.rpm" |

Warning

The IP address 192.168.25.x is used for PowerVault Storage communications. Therefore, do not use this IP address for other configurations.

The IP range x.y.246.1 - x.y.255.253 (where x and y are the first two octets of bmc_nic_subnet) is reserved by Omnia.
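The address rules in the table and warning above can be checked before running the tool. The following is a minimal sketch, not part of Omnia: the function names are hypothetical, and the octet-wise range rule is inferred from the two example ranges given for bmc_static_start_range (it also assumes that both ends share their first two octets).

```python
import ipaddress


def is_x_x_0_0(subnet: str) -> bool:
    """True when the value has the x.x.0.0 form required for subnet parameters."""
    try:
        octets = ipaddress.IPv4Address(subnet).packed
    except ipaddress.AddressValueError:
        return False
    return octets[2] == 0 and octets[3] == 0


def is_valid_static_range(start: str, end: str) -> bool:
    """Assumed rule: same first two octets, and each of the last two
    octets of the start must not exceed the matching octet of the end."""
    s = ipaddress.IPv4Address(start).packed
    e = ipaddress.IPv4Address(end).packed
    return s[:2] == e[:2] and s[2] <= e[2] and s[3] <= e[3]


def is_reserved_by_omnia(ip: str, bmc_nic_subnet: str) -> bool:
    """True when ip lies in x.y.246.1 - x.y.255.253 for the given subnet."""
    addr = ipaddress.IPv4Address(ip).packed
    base = ipaddress.IPv4Address(bmc_nic_subnet).packed
    if addr[:2] != base[:2]:
        return False
    return (246, 1) <= (addr[2], addr[3]) <= (255, 253)
```

With these helpers, the documented examples behave as described: 172.20.0.50 - 172.20.1.101 passes is_valid_static_range, while 172.20.0.101 - 172.20.1.50 does not.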

Before You Run The Provision Tool

(Recommended) Run prereq.sh to get the system ready to deploy Omnia. Alternatively, ensure that Ansible 2.12.9 and Python 3.8 are installed on the system. SELinux should also be disabled.

Set the hostname of the control plane using the hostname.domain name format. Create an entry in the /etc/hosts file on the control plane.

Hostname requirements

In the examples folder, a mapping_host_file.csv template is provided which can be used for DHCP configuration. The header in the template file must not be deleted before saving the file. It is recommended to provide this optional file as it allows IP assignments provided by Omnia to be persistent across control plane reboots.

The hostname should not contain the following characters: , (comma), . (period) or _ (underscore). However, the domain name is allowed commas and periods.

The hostname cannot start or end with a hyphen (-).

No upper case characters are allowed in the hostname.

The hostname cannot start with a number.

The hostname and the domain name (that is: hostname00000x.domain.xxx) cumulatively cannot exceed 64 characters. For example, if the node_name provided in input/provision_config.yml is ‘node’, and the domain_name provided is ‘omnia.test’, Omnia will set the hostname of a target compute node to ‘node00001.omnia.test’. Omnia appends 6 digits to the hostname to individually name each target node.

For example, controlplane.omnia.test is acceptable.
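The hostname rules above lend themselves to a quick pre-flight check. The sketch below is illustrative only and is not how Omnia validates hostnames; the function name is hypothetical, and it assumes the 64-character limit counts the dot that joins the hostname and the domain name.

```python
from typing import List


def hostname_problems(hostname: str, domain_name: str) -> List[str]:
    """Return a list of rule violations; an empty list means the name passes."""
    if not hostname:
        return ["hostname is empty"]
    problems = []
    if any(ch in hostname for ch in ",._"):
        problems.append("hostname contains a comma, period or underscore")
    if hostname.startswith("-") or hostname.endswith("-"):
        problems.append("hostname starts or ends with a hyphen")
    if any(ch.isupper() for ch in hostname):
        problems.append("hostname contains upper case characters")
    if hostname[0].isdigit():
        problems.append("hostname starts with a number")
    # Assumption: the limit applies to "<hostname>.<domain_name>" as a whole.
    if len(f"{hostname}.{domain_name}") > 64:
        problems.append("hostname and domain name exceed 64 characters")
    return problems


print(hostname_problems("controlplane", "omnia.test"))
print(hostname_problems("Control_Plane", "omnia.test"))
```

The first call reports no problems, which matches the acceptable example controlplane.omnia.test; the second reports the underscore and the upper case characters.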

Note

The domain name specified for the control plane should be the same as the one specified under domain_name in input/provision_config.yml.

To provision the bare metal servers, download one of the following ISOs for deployment:

To dictate IP address/MAC mapping, a host mapping file can be provided. Use the pxe_mapping_file.csv to create your own mapping file.

Ensure that all connection names under the network manager match their corresponding device names.

nmcli connection

In the event of a mismatch, edit the file /etc/sysconfig/network-scripts/ifcfg-<nic name> using vi editor.

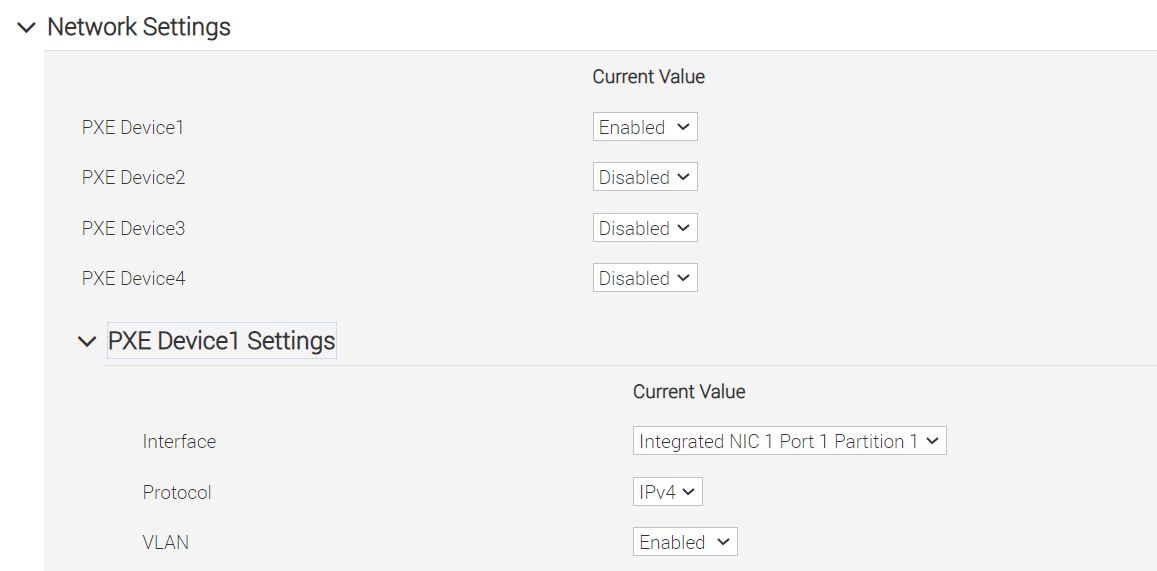

When discovering nodes via SNMP or a mapping file, all target nodes should be set up in PXE mode before running the playbook.

If RHEL is in use on the control plane, enable RedHat subscription. Omnia does not enable RedHat subscription on the control plane, and package installation may fail if the subscription is disabled.

Users should also ensure that all repos are available on the RHEL control plane.

Ensure that the pxe_nic and public_nic are in the firewalld zone: public.

The control plane NIC connected to remote servers (through the switch) should be configured with two IPs in a shared LOM set up. This NIC is configured by Omnia with the IPs xx.yy.255.254 and aa.bb.255.254 (where xx.yy are taken from bmc_nic_subnet and aa.bb are taken from admin_nic_subnet) when discovery_mechanism is set to bmc. For other discovery mechanisms, only the admin NIC is configured with aa.bb.255.254 (where aa.bb is taken from admin_nic_subnet).
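To make the addressing rule above concrete, the following sketch computes the control plane IPs that would result from a given configuration. It only mirrors the rule as stated; the function name and the returned dictionary keys are hypothetical and do not come from Omnia.

```python
import ipaddress
from typing import Dict, Optional


def control_plane_ips(discovery_mechanism: str,
                      admin_nic_subnet: str,
                      bmc_nic_subnet: Optional[str] = None) -> Dict[str, str]:
    """Derive the x.y.255.254 addresses described for the control plane NIC."""

    def dot_255_254(subnet: str) -> str:
        # Keep the first two octets of the subnet and append 255.254.
        first_two = ipaddress.IPv4Address(subnet).packed[:2]
        return str(ipaddress.IPv4Address(first_two + bytes([255, 254])))

    ips = {"admin": dot_255_254(admin_nic_subnet)}
    if discovery_mechanism == "bmc":
        if bmc_nic_subnet is None:
            raise ValueError("bmc_nic_subnet is needed when discovery_mechanism is bmc")
        ips["bmc"] = dot_255_254(bmc_nic_subnet)
    return ips


print(control_plane_ips("bmc", "10.5.0.0", "10.3.0.0"))
print(control_plane_ips("mapping", "10.5.0.0"))
```

With the default subnets from the parameter table, bmc discovery yields both 10.5.255.254 and 10.3.255.254, while mapping or snmp discovery yields only 10.5.255.254.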

Provisioning the cluster

Edit the input/provision_config.yml file to update the required variables.

Note

The first PXE device on target nodes should be the designated active NIC for PXE booting.

To deploy the Omnia provision tool, run the following commands:

    cd provision
    ansible-playbook provision.yml

By running provision.yml, the following configurations take place:

All compute nodes in the cluster will be enabled for PXE boot with the osimage mentioned in provision_config.yml.

A PostgreSQL database is set up with all relevant cluster information such as MAC IDs, hostname, admin IP, infiniband IPs, BMC IPs etc.

To access the DB, run:

    psql -U postgres
    \c omniadb

To view the schema being used in the cluster:

    \dn

To view the tables in the database:

    \dt

To view the contents of the nodeinfo table:

    select * from cluster.nodeinfo;

     id | serial  | node      | hostname             | admin_mac         | admin_ip   | bmc_ip     | ib_ip       | status       | bmc_mode
    ----+---------+-----------+----------------------+-------------------+------------+------------+-------------+--------------+----------
      1 | XXXXXXX | node00001 | node00001.omnia.test | ec:2a:72:32:c6:98 | 10.5.0.111 | 10.3.0.111 | 10.10.0.111 | powering-on  | static
      2 | XXXXXXX | node00002 | node00002.omnia.test | f4:02:70:b8:cc:80 | 10.5.0.112 | 10.3.0.112 | 10.10.0.112 | booted       | dhcp
      3 | XXXXXXX | node00003 | node00003.omnia.test | 70:b5:e8:d1:19:b6 | 10.5.0.113 | 10.3.0.113 | 10.10.0.113 | post-booting | static
      4 | XXXXXXX | node00004 | node00004.omnia.test | b0:7b:25:dd:e8:4a | 10.5.0.114 | 10.3.0.114 | 10.10.0.114 | booted       | static
      5 | XXXXXXX | node00005 | node00005.omnia.test | f4:02:70:b8:bc:2a | 10.5.0.115 | 10.3.0.115 | 10.10.0.115 | booted       | static

Possible values of status are static, powering-on, installing, bmcready, booting, post-booting, booted, failed. The status will be updated every 3 minutes.

Note

For nodes listing status as ‘failed’, provisioning logs can be viewed in /var/log/xcat/xcat.log on the target nodes.
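When many nodes are being provisioned, it can help to filter the nodeinfo output for nodes that have not reached the booted status. The sketch below parses text saved from the psql query shown earlier; it is a convenience example under that assumption, not an Omnia utility, and the function name is hypothetical.

```python
from typing import Dict, List


def parse_psql_table(text: str) -> List[Dict[str, str]]:
    """Turn psql's aligned table output into one dict per row."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    if not lines:
        return []
    header = [col.strip() for col in lines[0].split("|")]
    rows = []
    for ln in lines[1:]:
        if set(ln.strip()) <= set("-+"):
            continue  # the ----+---- separator line
        if "|" not in ln:
            continue  # footer such as "(5 rows)"
        cells = [cell.strip() for cell in ln.split("|")]
        rows.append(dict(zip(header, cells)))
    return rows


# Three rows copied from the sample output above.
SAMPLE = """
 id | serial  | node      | hostname             | admin_ip   | status       | bmc_mode
----+---------+-----------+----------------------+------------+--------------+----------
  1 | XXXXXXX | node00001 | node00001.omnia.test | 10.5.0.111 | powering-on  | static
  2 | XXXXXXX | node00002 | node00002.omnia.test | 10.5.0.112 | booted       | dhcp
  3 | XXXXXXX | node00003 | node00003.omnia.test | 10.5.0.113 | post-booting | static
(3 rows)
"""

not_booted = [row["node"] for row in parse_psql_table(SAMPLE) if row["status"] != "booted"]
print(not_booted)
```

For the sample rows, this lists node00001 and node00003, the two nodes that are still powering on or post-booting.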

Offline repositories will be created based on the OS being deployed across the cluster.

The xCAT post bootscript is configured to assign the hostname (with domain name) on the provisioned servers.

Once the playbook execution is complete, ensure that PXE boot and RAID configurations are set up on remote nodes. Users are then expected to reboot target servers discovered via SNMP or mapping to provision the OS.

Note

If the cluster does not have access to the internet, AppStream will not function. To provide internet access through the control plane (via the PXE network NIC), update primary_dns and secondary_dns in provision_config.yml and run provision.yml.

All ports required for xCAT to run will be opened (for a complete list, check out the Security Configuration Document).

After running provision.yml, the file input/provision_config.yml will be encrypted. To edit the file, use the command:

    ansible-vault edit provision_config.yml --vault-password-file .provision_vault_key

To re-provision target servers, provision.yml can be re-run with a new inventory file that contains a list of admin (PXE) IPs.

Post execution of provision.yml, IPs/hostnames cannot be re-assigned by changing the mapping file. However, the addition of new nodes is supported as explained below.

Once the cluster is provisioned, enable RedHat subscription on all RHEL target nodes to ensure smooth execution of the Omnia playbooks that configure the cluster with Slurm and Kubernetes.

Warning

Once xCAT is installed, restart your SSH session to the control plane to ensure that the newly set up environment variables come into effect.

To avoid breaking the passwordless SSH channel on the control plane, do not run ssh-keygen commands post execution of provision.yml.

Installing CUDA

Using the provision tool

If cuda_toolkit_path is provided in input/provision_config.yml and NVIDIA GPUs are available on the target nodes, CUDA packages will be deployed post provisioning without user intervention.

Using the Accelerator playbook

CUDA can also be installed using accelerator.yml after provisioning the servers (Assuming the provision tool did not install CUDA packages).

Note

The CUDA package can be downloaded from https://developer.nvidia.com/cuda-downloads.

Installing OFED

Using the provision tool

If mlnx_ofed_path is provided in input/provision_config.yml and Mellanox NICs are available on the target nodes, OFED packages will be deployed post provisioning without user intervention.

Using the Network playbook

OFED can also be installed using network.yml after provisioning the servers (Assuming the provision tool did not install OFED packages).

Note

The OFED package can be downloaded from https://network.nvidia.com/products/infiniband-drivers/linux/mlnx_ofed/.

Assigning infiniband IPs

When ib_nic_subnet is provided in input/provision_config.yml, the InfiniBand NICs on target nodes are assigned IPv4 addresses within the subnet without user intervention. When the PXE range and InfiniBand subnet are provided, the InfiniBand NICs will be assigned IPs with the same 3rd and 4th octets as the PXE NIC.

For example, when the PXE NIC on a target node is assigned 10.5.0.101, the InfiniBand NIC is assigned 10.10.0.101 (where ib_nic_subnet is 10.10.0.0).

Note

The IP is assigned to the interface ib0 on target nodes only if the interface is present in active mode. If no such NIC interface is found, xCAT will list the status of the node object as failed.

Assigning BMC IPs

When target nodes are discovered via SNMP or mapping files (i.e., discovery_mechanism is set to snmp or mapping in input/provision_config.yml), the bmc_nic_subnet in input/provision_config.yml can be used to assign BMC IPs to iDRAC without user intervention. When the PXE range and BMC subnet are provided, the iDRAC NICs will be assigned IPs with the same 3rd and 4th octets as the PXE NIC.

For example, when the PXE NIC on a target node is assigned 10.5.0.101, the iDRAC NIC is assigned 10.3.0.101 (where bmc_nic_subnet is 10.3.0.0).
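The octet-matching rule used for both InfiniBand and BMC addresses can be expressed in a few lines. This is a sketch of the rule as documented, with a hypothetical function name; it is not taken from the Omnia code base.

```python
import ipaddress


def matching_ip(pxe_ip: str, subnet: str) -> str:
    """Combine the first two octets of subnet with the last two octets of pxe_ip."""
    pxe = ipaddress.IPv4Address(pxe_ip).packed
    base = ipaddress.IPv4Address(subnet).packed
    return str(ipaddress.IPv4Address(base[:2] + pxe[2:]))


print(matching_ip("10.5.0.101", "10.10.0.0"))  # InfiniBand example
print(matching_ip("10.5.0.101", "10.3.0.0"))   # BMC example
```

Both calls reproduce the documented examples: 10.10.0.101 for ib_nic_subnet 10.10.0.0 and 10.3.0.101 for bmc_nic_subnet 10.3.0.0.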

Using multiple versions of a given OS

Omnia now supports deploying different versions of the same OS. With each run of provision.yml, a new deployable OS image is created with a distinct type (rocky or RHEL) and version (8.0, 8.1, 8.2, 8.3, 8.4, 8.5, 8.6, 8.7) depending on the values provided in input/provision_config.yml.

Note

While Omnia deploys the minimal version of the OS, the multiple version feature requires that the Rocky full (DVD) version of the OS be provided.

DHCP routing for internet access

Omnia now supports DHCP routing via the control plane. To enable routing, update primary_dns and secondary_dns in input/provision_config.yml with the appropriate IPs (hostnames are currently not supported). For compute nodes that are not directly connected to the internet (i.e., only the PXE network is configured), this configuration allows for internet connectivity.
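Because hostnames are not accepted for primary_dns and secondary_dns, a quick check that each value is an IP literal can catch mistakes early. The helper below is a hypothetical example, and the addresses passed to it are placeholders from a documentation range.

```python
import ipaddress


def is_ip_literal(value: str) -> bool:
    """True when value parses as an IPv4 or IPv6 address rather than a hostname."""
    try:
        ipaddress.ip_address(value)
    except ValueError:
        return False
    return True


print(is_ip_literal("192.0.2.53"))       # placeholder IP, accepted
print(is_ip_literal("dns.example.com"))  # hostname, rejected
```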

Disk partitioning

Omnia now allows for customization of disk partitions applied to remote servers. The disk partition desired_capacity has to be provided in MB. Valid mount_point values accepted for disk partition are /home, /var, /tmp, /usr, swap. Default partition size provided for /boot is 1024MB, /boot/efi is 256MB and the remaining space to / partition. Values are accepted in the form of JSON list such as:

disk_partition:

- { mount_point: "/home", desired_capacity: "102400" }

- { mount_point: "swap", desired_capacity: "10240" }
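The constraints on disk_partition entries can be checked once the list has been loaded from input/provision_config.yml. The sketch below assumes the entries are already available as a list of dictionaries and that desired_capacity must be a positive whole number of MB; the function name is hypothetical.

```python
from typing import Dict, List

# Mount points listed as valid in the documentation above.
VALID_MOUNT_POINTS = {"/home", "/var", "/tmp", "/usr", "swap"}


def partition_problems(partitions: List[Dict[str, str]]) -> List[str]:
    """Return one message per invalid field; an empty list means all entries pass."""
    problems = []
    for entry in partitions:
        mount = entry.get("mount_point")
        capacity = str(entry.get("desired_capacity", ""))
        if mount not in VALID_MOUNT_POINTS:
            problems.append(f"unsupported mount_point: {mount!r}")
        # Assumption: capacity is a positive integer expressed in MB.
        if not capacity.isdigit() or int(capacity) == 0:
            problems.append(f"desired_capacity for {mount!r} is not a positive number of MB")
    return problems


example = [
    {"mount_point": "/home", "desired_capacity": "102400"},
    {"mount_point": "swap", "desired_capacity": "10240"},
]
print(partition_problems(example))
```

The example list mirrors the two entries shown above and produces no problems.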

After Running the Provision Tool

Once the servers are provisioned, run the post provision script to:

Create node_inventory in /opt/omnia listing provisioned nodes.

    cat /opt/omnia/node_inventory
    10.5.0.100 service_tag=XXXXXXX operating_system=RedHat
    10.5.0.101 service_tag=XXXXXXX operating_system=RedHat
    10.5.0.102 service_tag=XXXXXXX operating_system=Rocky
    10.5.0.103 service_tag=XXXXXXX operating_system=Rocky

To run the script, use the below command:

    ansible-playbook post_provision.yml
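The node_inventory file uses one line per node, with the admin IP followed by key=value pairs. The following sketch parses text in that layout, for instance to list nodes by operating system. It assumes the layout shown in the sample above; the function name is hypothetical.

```python
from typing import Dict, List


def parse_node_inventory(text: str) -> List[Dict[str, str]]:
    """Parse '<ip> key=value ...' lines into one dict per node."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith(("#", "[")):
            continue  # skip blank lines, comments and group headers
        node = {"ip": parts[0]}
        for token in parts[1:]:
            key, sep, value = token.partition("=")
            if sep:
                node[key] = value
        nodes.append(node)
    return nodes


# Sample content copied from the listing above.
SAMPLE_INVENTORY = """\
10.5.0.100 service_tag=XXXXXXX operating_system=RedHat
10.5.0.101 service_tag=XXXXXXX operating_system=RedHat
10.5.0.102 service_tag=XXXXXXX operating_system=Rocky
10.5.0.103 service_tag=XXXXXXX operating_system=Rocky
"""

rocky_ips = [n["ip"] for n in parse_node_inventory(SAMPLE_INVENTORY)
             if n.get("operating_system") == "Rocky"]
print(rocky_ips)
```

For the sample content, the Rocky nodes are 10.5.0.102 and 10.5.0.103.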