Frequently asked questions

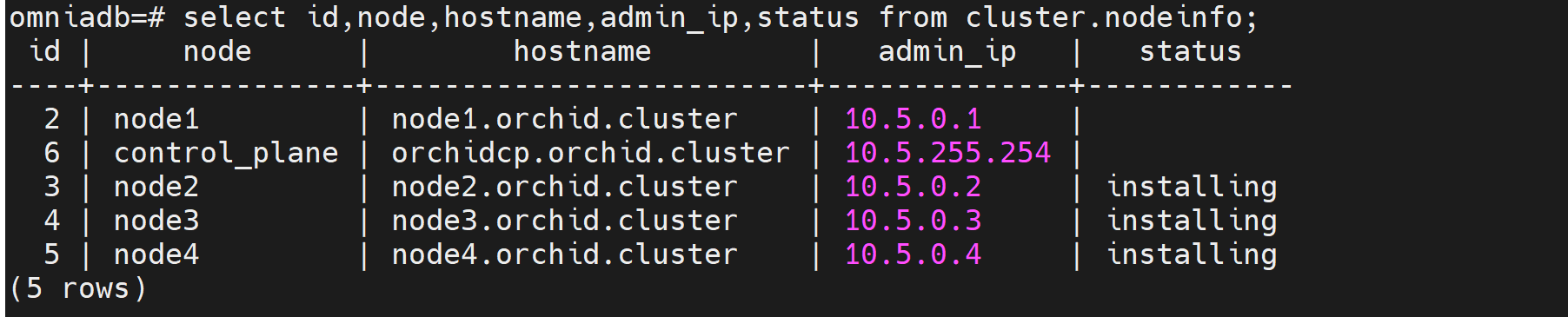

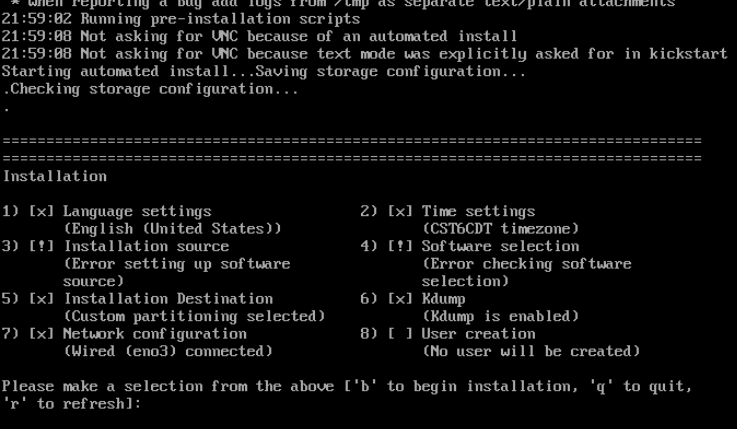

⦾ Why is the provisioning status of the target servers stuck at ‘installing’ in cluster.nodeinfo (omniadb)?

Potential Causes:

The disk partition may not have enough storage space per the requirements specified in input/provision_config.yml (under disk_partition).

The provided ISO may be corrupt or incomplete.

Hardware issues (auto-reboot may fail at POST).

A virtual disk may not have been created

Resolution:

Add more space to the server or modify the requirements specified in input/provision_config.yml (under disk_partition).

Download the ISO again, verify the checksum and download size, and re-run the provision tool.

Resolve/replace the faulty hardware and PXE boot the node.

Create a virtual disk and PXE boot the node.
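Verifying the ISO checksum can be scripted. This is a minimal sketch, assuming you have the SHA-256 digest published alongside the ISO download. The function name verify_iso_checksum is hypothetical and not part of Omnia.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Omnia): compare an ISO's SHA-256 digest
# with the digest published for the download.
verify_iso_checksum() {
  local iso_path=$1 expected actual
  # Published digests are sometimes upper-case; sha256sum prints lower-case.
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  if [ ! -r "$iso_path" ]; then
    echo "cannot read $iso_path" >&2
    return 2
  fi
  # sha256sum prints "<digest>  <file>"; keep only the digest.
  actual=$(sha256sum "$iso_path" | awk '{print $1}')
  if [ "$actual" = "$expected" ]; then
    echo "checksum OK: $iso_path"
    return 0
  fi
  echo "checksum MISMATCH for $iso_path: expected $expected, got $actual" >&2
  return 1
}
```

For example, verify_iso_checksum /path/to/downloaded.iso <published-sha256> (both arguments are placeholders). A mismatch suggests the ISO should be downloaded again before re-running the provision tool.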

⦾ Why is the provisioning status of my target servers stuck at ‘powering-on’ in cluster.nodeinfo (omniadb)?

Potential Causes:

Hardware issues (auto-reboot may fail because hardware tests fail).

The target node may already have an OS installed, and the first PXE boot device may not be configured correctly.

Resolution:

Resolve/replace the faulty hardware and PXE boot the node.

Target servers should be configured to boot in PXE mode with the appropriate NIC as the first boot device.

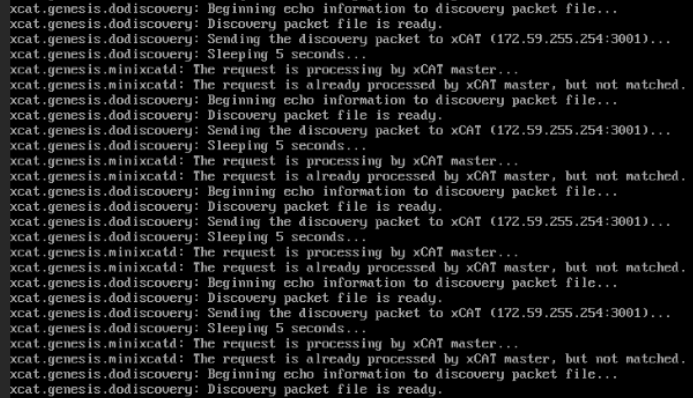

⦾ What to do if PXE boot fails while discovering target nodes via switch_based discovery, with the provisioning status stuck at ‘powering-on’ in cluster.nodeinfo (omniadb):

Rectify any probable causes, such as incorrect or unavailable credentials (switch_snmp3_username and switch_snmp3_password provided in input/provision_config.yml), network glitches, multiple NICs with the same IP address as the control plane, or incorrect switch IP/port details.

Run the clean-up script:

cd utils
ansible-playbook control_plane_cleanup.yml

Re-run the provision tool (ansible-playbook discovery_provision.yml).
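One of the causes listed above, several NICs on the control plane carrying the same IP address, can be checked before re-running the tool. This is a rough sketch; the helper name find_duplicate_ips is hypothetical, and it assumes the one-line output format of ip -o -4 addr show.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list IPv4 addresses assigned to more than one interface.
# Reads lines in the format printed by "ip -o -4 addr show", e.g.
#   2: eno1    inet 10.5.0.1/16 brd 10.5.255.255 scope global eno1 ...
find_duplicate_ips() {
  awk '
    $3 == "inet" {
      split($4, addr, "/")          # strip the prefix length
      key = addr[1] SUBSEP $2       # address + interface name
      if (!(key in seen)) {         # count each interface once per address
        seen[key] = 1
        count[addr[1]]++
      }
    }
    END {
      for (ip in count)
        if (count[ip] > 1) print ip
    }
  ' | sort
}
```

Usage: ip -o -4 addr show | find_duplicate_ips. Any address it prints is assigned to more than one interface and is worth correcting before running the clean-up script.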

⦾ What to do if playbook execution fails due to an external (network, hardware, etc.) failure:

Re-run the playbook whose execution failed once the issue is resolved.

⦾ Why don’t IPA commands work after setting up FreeIPA on the cluster?

Potential Cause:

Kerberos authentication may be missing on the target node.

Resolution:

Run kinit admin on the node and provide the kerberos_admin_password when prompted. (This password is also entered in input/security_config.yml.)
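If you script IPA administration, a small guard can request a ticket only when none is cached. This is a minimal sketch, assuming the MIT Kerberos client tools (klist, kinit) are installed on the node; the function name ensure_ipa_admin_ticket is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical guard: run "kinit admin" only if no valid Kerberos ticket is cached.
# "klist -s" is silent and exits 0 when a valid credential cache exists,
# non-zero otherwise. It does not check which principal owns the ticket.
ensure_ipa_admin_ticket() {
  if klist -s; then
    echo "valid Kerberos ticket already present"
    return 0
  fi
  # kinit prompts interactively for the password; enter the
  # kerberos_admin_password from input/security_config.yml.
  kinit admin
}
```

After the guard succeeds, an IPA command such as ipa user-find should authenticate. Because klist -s only confirms that some valid ticket exists, run kinit admin explicitly if another principal's ticket may be cached.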

⦾ Why am I unable to login using LDAP credentials after successfully creating a user account?

Potential Cause:

Whitespace in the LDIF file may have caused an encryption error. Check the file for stray whitespace by running cat -vet <filename>.

Resolution:

Remove the whitespace and re-run the LDIF file.
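cat -vet marks each line end with $ and carriage returns with ^M, so stray whitespace appears just before the $. The same check and clean-up can be scripted. This is a minimal sketch with hypothetical helper names, assuming GNU grep and sed.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: find and remove trailing whitespace (space, tab, CR)
# in an LDIF file. Leading spaces are left alone, because in LDIF a line
# starting with a single space continues the previous line.
ldif_trailing_ws_lines() {
  # Print the numbers of lines that end in whitespace.
  grep -n '[[:space:]]$' "$1" | cut -d: -f1
}

ldif_strip_trailing_ws() {
  # Edit in place, keeping the original as <file>.bak.
  sed -i.bak 's/[[:space:]]*$//' "$1"
}
```

For example, ldif_trailing_ws_lines users.ldif (the file name is a placeholder) lists the offending lines; ldif_strip_trailing_ws users.ldif then removes the whitespace before the file is re-applied.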

⦾ Why are the status and admin_mac fields not populated for specific target nodes in the cluster.nodeinfo table?

Causes:

Nodes do not have their first PXE device set as the designated active NIC for PXE booting.

Nodes that have been discovered via multiple discovery mechanisms may be listed multiple times. Duplicate node entries will not list MAC addresses.

Resolution:

Configure the first PXE device to be active for PXE booting.

PXE boot the target node manually.

Duplicate node objects (identified by service tag) will be deleted automatically. To manually delete node objects, use utils/delete_node.yml.
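To spot the affected rows, the relevant columns can be exported and scanned. This is a minimal sketch, assuming comma-separated rows of service_tag, admin_mac and status with no header. The service_tag column name, the helper name and the psql export in the usage note are assumptions, not confirmed Omnia schema.

```bash
#!/usr/bin/env bash
# Hypothetical helper: flag cluster.nodeinfo rows matching the symptoms above.
# Input on stdin: "service_tag,admin_mac,status" rows, no header (assumed layout).
check_nodeinfo_rows() {
  awk -F, '
    NF == 0 { next }                                 # ignore blank lines
    { count[$1]++ }                                  # rows per service tag
    $2 == "" || $3 == "" { print "missing admin_mac/status: " $1 }
    END {
      for (tag in count)
        if (count[tag] > 1) print "duplicate service tag: " tag
    }
  '
}
```

A possible export, under the same column-name assumption: psql -U postgres -d omniadb -At -F, -c "SELECT service_tag, admin_mac, status FROM cluster.nodeinfo" | check_nodeinfo_rows. psql prints NULL values as empty fields in this mode, so unpopulated columns show up as missing.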

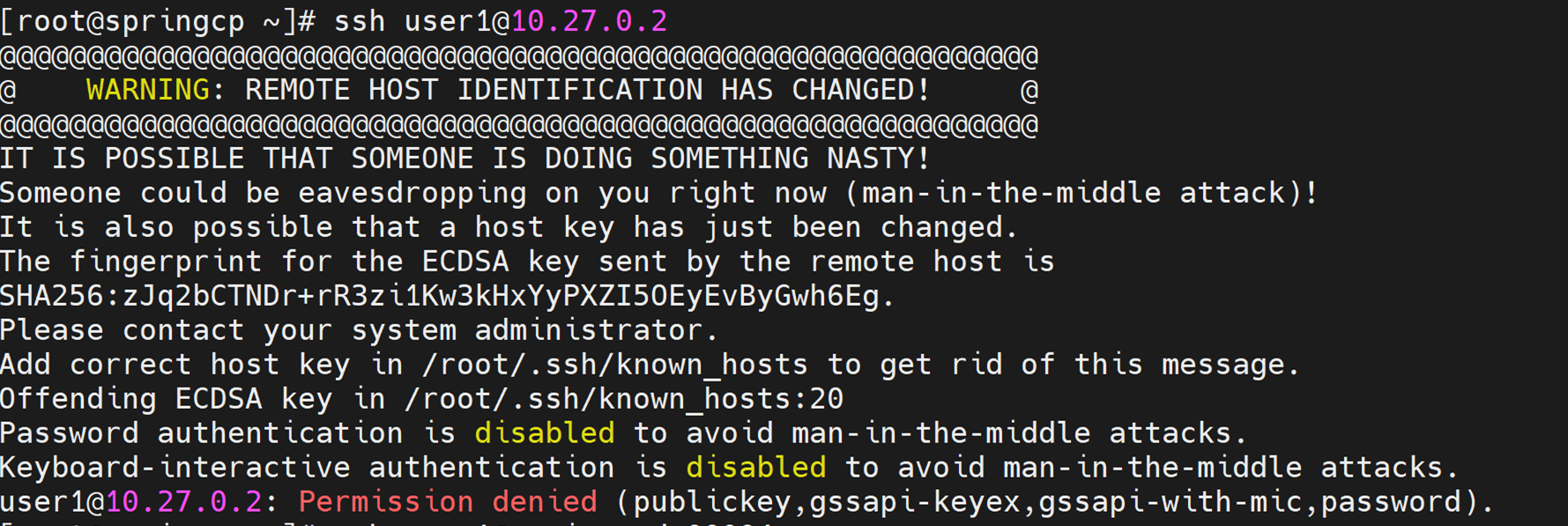

⦾ What to do if user login fails when accessing a cluster node:

Potential Cause:

The host key for the node stored on the control plane (in known_hosts) may be outdated.

Resolution:

Remove the stale key using ssh-keygen -R <hostname/server IP>.

Retry login.

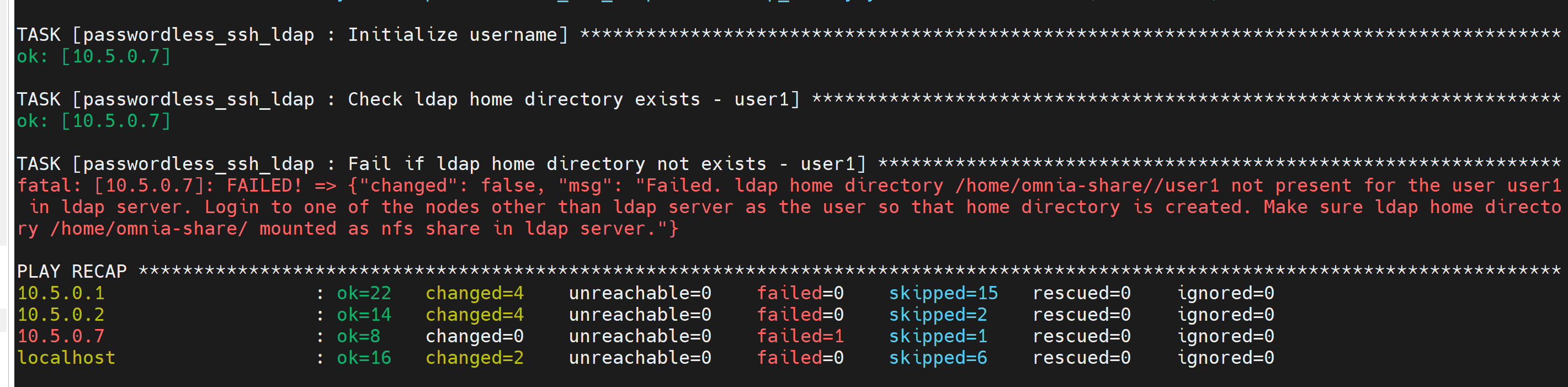

⦾ Why does the ‘Fail if LDAP home directory exists’ task fail during user_passwordless_ssh.yml?

Potential Cause: The required NFS share is not set up on the control plane.

Resolution:

If enable_omnia_nfs is true in input/omnia_config.yml, follow the steps below to configure an NFS share on your LDAP server:

From the kube_control_plane:

Add the LDAP server IP address to /etc/exports.

Run exportfs -ra to enable the NFS configuration.

From the LDAP server:

Add the required fstab entries in /etc/fstab. (The corresponding entry is available on the compute nodes in /etc/fstab.)

Mount the NFS share using:

mount manager_ip:/home/omnia-share /home/omnia-share
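The two file entries can be generated rather than typed by hand. This sketch rests on stated assumptions: the export options rw,sync,no_root_squash and the fstab options defaults 0 0 are illustrative choices, not values taken from Omnia, and the helper names are hypothetical. Match them to the entry already present on your compute nodes.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that print entries for the omnia-share NFS export.
# Export and mount options below are assumptions; align them with the
# /etc/fstab entry on your compute nodes.
SHARE_DIR=/home/omnia-share

nfs_exports_line() {
  # $1: IP address of the LDAP server allowed to mount the share
  printf '%s %s(rw,sync,no_root_squash)\n' "$SHARE_DIR" "$1"
}

nfs_fstab_line() {
  # $1: IP address of the kube_control_plane (manager) exporting the share
  printf '%s:%s %s nfs defaults 0 0\n' "$1" "$SHARE_DIR" "$SHARE_DIR"
}
```

On the kube_control_plane: nfs_exports_line <LDAP server IP> | sudo tee -a /etc/exports, then sudo exportfs -ra. On the LDAP server: nfs_fstab_line <manager_ip> | sudo tee -a /etc/fstab, then sudo mount /home/omnia-share, which picks up the new fstab entry.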

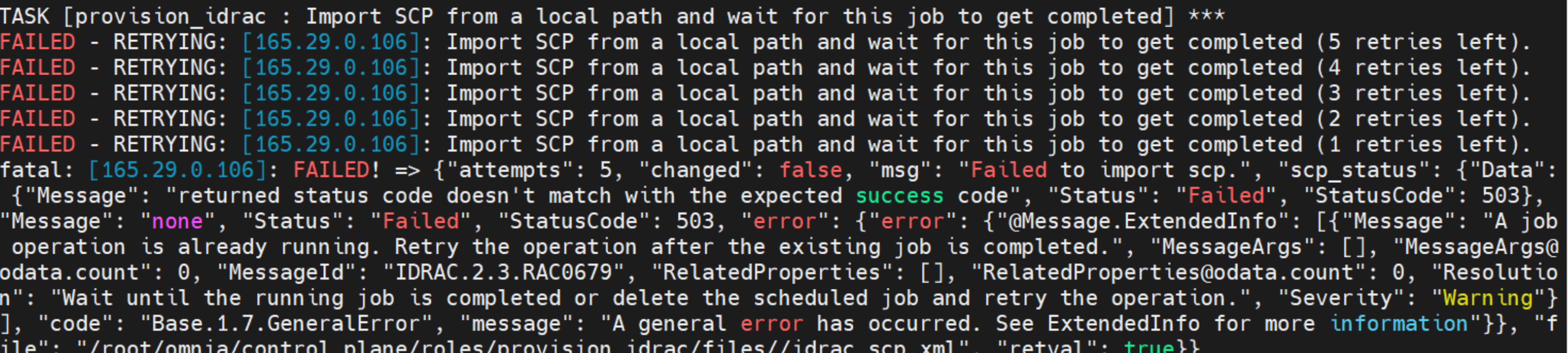

⦾ Why does the ‘Import SCP from a local path’ task fail during idrac.yml?

Potential Cause: The target server may be stalled during the booting process.

Resolution: Bring the target node up and re-run the script.

⦾ Why is the node status stuck at ‘powering-on’ or ‘powering-off’ after a control plane reboot?

Potential Cause: The nodes were powering off or powering on during the control plane reboot/shutdown.

Resolution: In the case of a planned shutdown, ensure that the control plane is shut down after the compute nodes. When powering back up, the control plane should be powered on and xCAT services resumed before bringing up the compute nodes. In short, have the control plane as the first node up and the last node down.

For more information, click here

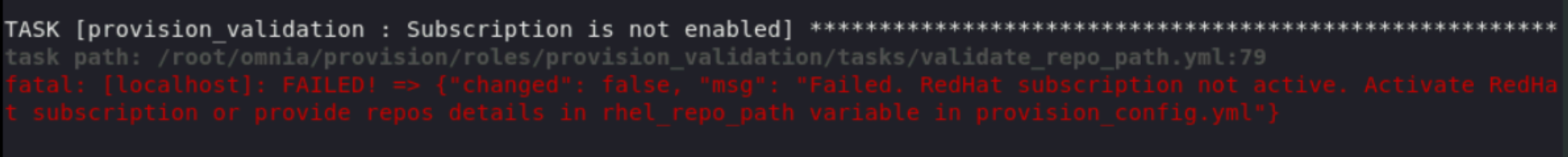

⦾ Why do subscription errors occur on RHEL control planes when rhel_repo_local_path (in input/provision_config.yml) is not provided and control plane does not have an active subscription?

For many of Omnia’s features to work, RHEL control planes need access to the following repositories:

AppStream

BaseOS

Without an active subscription, this can only be achieved using local repos specified in rhel_repo_local_path (input/provision_config.yml).

Note

To enable the repositories, run the following commands:

subscription-manager repos --enable=codeready-builder-for-rhel-8-x86_64-rpms

subscription-manager repos --enable=rhel-8-for-x86_64-appstream-rpms

subscription-manager repos --enable=rhel-8-for-x86_64-baseos-rpms

Verify your changes by running:

yum repolist enabled
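To check the result in a script rather than by eye, the repo ids in the yum repolist enabled output can be compared against the three repositories enabled above. This is a minimal sketch; missing_rhel_repos is a hypothetical helper, and it assumes the repo id is the first column of each output line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print which required RHEL 8 repos are NOT enabled.
# Reads "yum repolist enabled" output on stdin; the header line ("repo id ...")
# never matches a required id, so it is harmless.
missing_rhel_repos() {
  local enabled required
  enabled=$(awk '{print $1}')          # first column = repo id
  for required in \
      codeready-builder-for-rhel-8-x86_64-rpms \
      rhel-8-for-x86_64-appstream-rpms \
      rhel-8-for-x86_64-baseos-rpms; do
    if ! printf '%s\n' "$enabled" | grep -qxF "$required"; then
      echo "$required"
    fi
  done
}
```

Usage: yum repolist enabled | missing_rhel_repos prints nothing when all three repositories are enabled.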

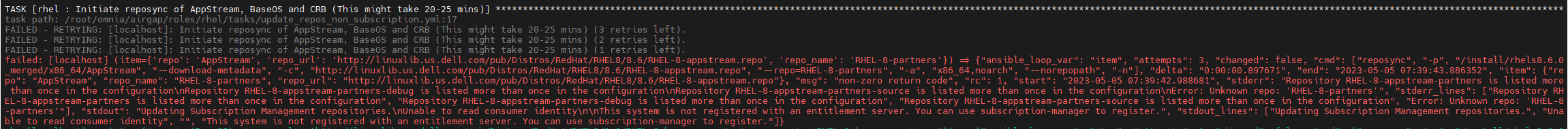

⦾ Why does the task: Initiate reposync of AppStream, BaseOS and CRB fail?

Potential Cause: The repo_url, repo_name or repo provided in rhel_repo_local_path (input/provision_config.yml) may be invalid.

Omnia does not validate the input of rhel_repo_local_path.

Resolution: Ensure the correct values are passed before re-running discovery_provision.yml.
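Since Omnia does not validate these values, you could check each repository URL yourself before re-running. A yum/dnf repository serves its metadata index at repodata/repomd.xml, so a URL that cannot serve that file is unlikely to work for reposync. This is a minimal sketch using curl; the helper name check_repo_url is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: confirm a URL looks like a usable yum/dnf repository
# by fetching its metadata index (repodata/repomd.xml).
check_repo_url() {
  local url=${1%/}   # drop one trailing slash so the path joins cleanly
  if curl -fsS -o /dev/null "$url/repodata/repomd.xml"; then
    echo "OK: $url"
  else
    echo "UNREACHABLE or not a repo: $url" >&2
    return 1
  fi
}
```

Run it once for every repository URL you entered in rhel_repo_local_path, for example check_repo_url http://<server>/<path>/AppStream (a placeholder). It checks only that metadata is reachable, not that the repo name matches.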

⦾ How to add a new node for provisioning

Using a mapping file:

Update the existing mapping file by appending the new entry (without disrupting the older entries), or provide a new mapping file by pointing pxe_mapping_file_path in provision_config.yml to the new location.

Run discovery_provision.yml.

Using the switch IP:

Run discovery_provision.yml once the switch has discovered the potential new node.
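Appending to the mapping file without disturbing existing rows can be done with a small guard. This sketch makes assumptions: the column layout in the test data (SERVICE_TAG,HOSTNAME,ADMIN_MAC,ADMIN_IP,BMC_IP) and using the first field as the node key are illustrative; follow the header of the mapping file template for your Omnia version. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append one CSV row to a PXE mapping file without
# modifying existing rows. The first field is treated as the node key
# (an assumption about the file layout).
append_mapping_entry() {
  local file=$1 entry=$2 key
  key=${entry%%,*}                      # first CSV field
  if cut -d, -f1 "$file" | grep -qxF "$key"; then
    echo "entry for $key already present; not appending" >&2
    return 1
  fi
  # If the last line lacks a newline, add one so rows are not glued together.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    echo >> "$file"
  fi
  printf '%s\n' "$entry" >> "$file"
}
```

After appending, run discovery_provision.yml as described above.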

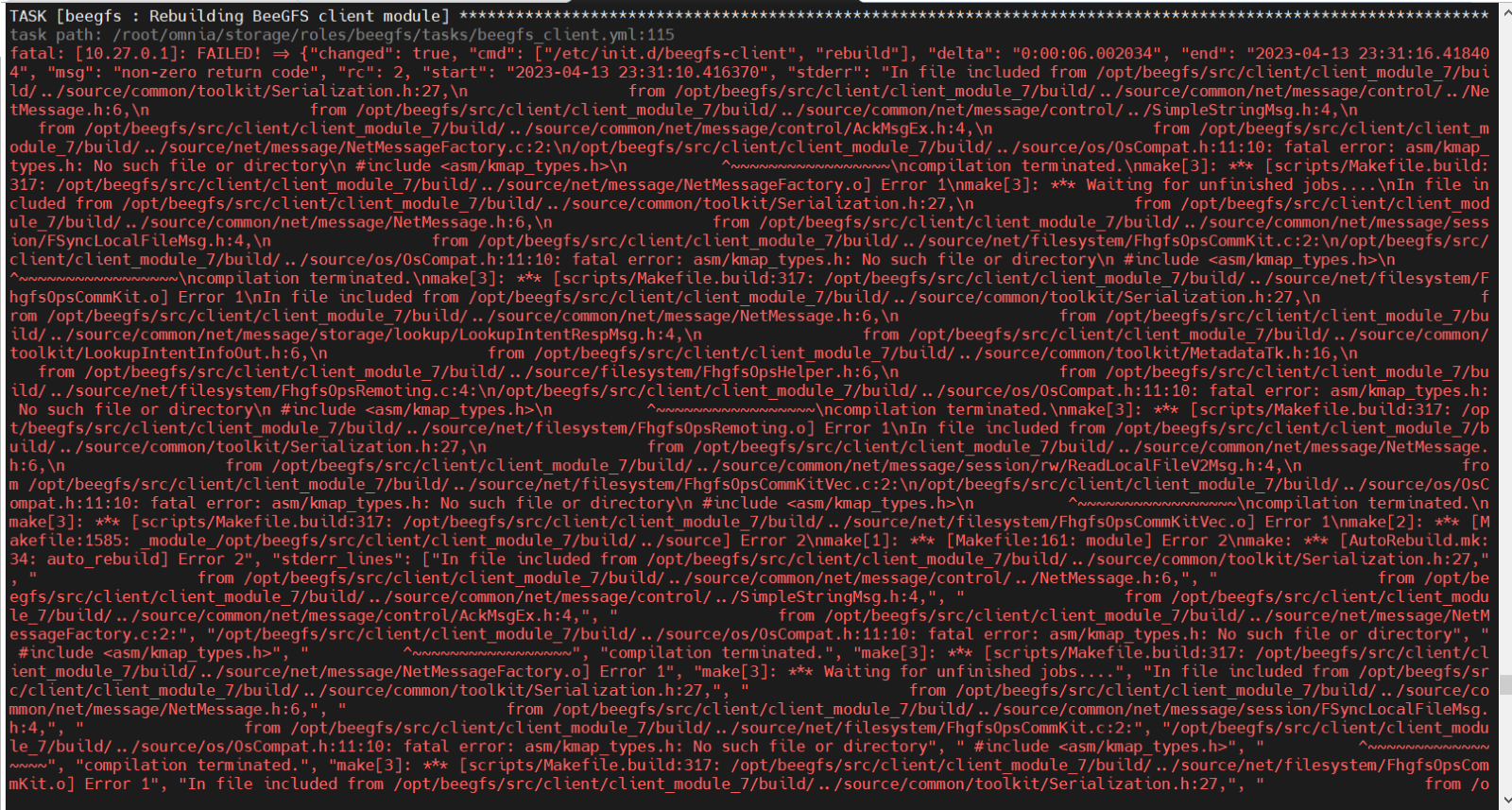

⦾ Why does the task: ‘BeeGFS: Rebuilding BeeGFS client module’ fail?

Potential Cause: BeeGFS version 7.3.0 is in use.

Resolution: Use BeeGFS client version 7.3.1 when setting up BeeGFS on the cluster.

⦾ Why does splitting an Ethernet Z-series port fail with “Failed. Either port already split with different breakout value or port is not available on ethernet switch”?

Potential Causes:

The port is already split.

It is an even-numbered port.

Resolution:

Changing the breakout_value on a split port is currently not supported. Ensure the port is un-split before assigning a new breakout_value.

⦾ What to do if the LC (Lifecycle Controller) is not ready:

Verify that the LC is in a ready state for all servers:

racadm getremoteservicesstatus

PXE boot the target server.
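When checking many servers, the racadm output can be tested in a script. This is a minimal sketch, assuming the output contains a line of the form "LC Status : Ready"; adjust the pattern if your iDRAC firmware prints it differently. The helper name lc_is_ready is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if "racadm getremoteservicesstatus" output
# (on stdin) reports the Lifecycle Controller as Ready, non-zero otherwise.
# Assumes a line like "LC Status : Ready" (padding around ":" is tolerated).
lc_is_ready() {
  awk -F: '
    /^[ \t]*LC Status[ \t]*:/ {
      value = $2
      gsub(/^[ \t\r]+|[ \t\r]+$/, "", value)   # trim surrounding whitespace
      found = 1
      ready = (value == "Ready")
    }
    END { exit !(found && ready) }
  '
}
```

For a remote iDRAC, something like racadm -r <iDRAC IP> -u <user> -p <password> getremoteservicesstatus | lc_is_ready && echo ready could be looped over your servers before PXE booting them.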

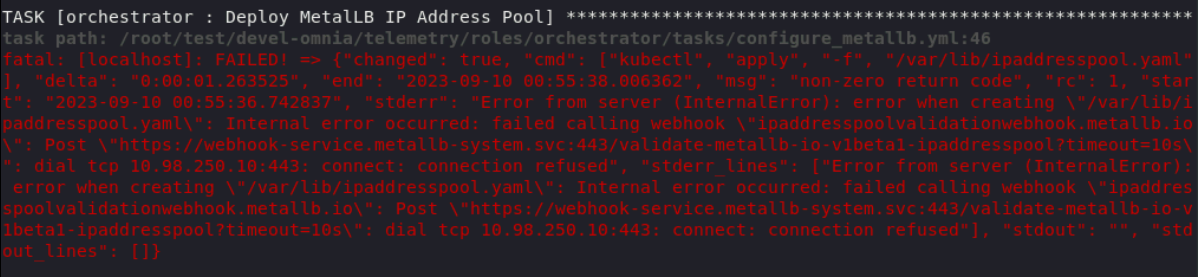

⦾ Why does the task: ‘Orchestrator: Deploy MetalLB IP Address pool’ fail?

Potential Cause: The /var partition is full (potentially because images are not cleared after intel-oneapi Docker images are used to run benchmarks on the cluster with Apptainer support).

Resolution: Clear the /var partition and retry telemetry.yml.
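Before re-running, it can help to confirm how full /var actually is. This is a minimal sketch using POSIX df -P output; the helper names and the 90% default threshold are arbitrary, hypothetical choices.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: report how full the filesystem holding a path is.
# "df -P" guarantees one data row; column 5 is the use percentage.
partition_usage_pct() {
  df -P "${1:-/var}" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Return non-zero when usage reaches the threshold (default 90%, arbitrary).
warn_if_full() {
  local path=${1:-/var} limit=${2:-90} pct
  pct=$(partition_usage_pct "$path")
  if [ "$pct" -ge "$limit" ]; then
    echo "$path is ${pct}% full; clear space before re-running telemetry.yml" >&2
    return 1
  fi
  echo "$path is ${pct}% full"
}
```

What to delete depends on what filled the partition; du -xsh /var/* | sort -h shows the largest directories. If leftover container images are the cause, docker image prune or apptainer cache clean may reclaim space, provided those tools are in use on the node.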

⦾ Why does the task: [Telemetry]: TASK [grafana : Wait for grafana pod to come to ready state] fail with a timeout error?

Potential Cause: The Docker Hub pull rate limit has been exceeded.

Resolution: Manually log in to your Docker account on the control plane with its username and password (for example, using docker login).

⦾ Is provisioning servers using BOSS controller supported by Omnia?

Yes. From Omnia 1.2.1 onwards, provisioning a server using a BOSS controller is supported.

⦾ What are the licenses required when deploying a cluster through Omnia?

While Omnia playbooks are licensed under Apache 2.0, Omnia deploys multiple software packages that are licensed separately by their respective developer communities. For a comprehensive list of software and their licenses, click here.

If you have any feedback about Omnia documentation, please reach out at omnia.readme@dell.com.